The History and Future of Web Mining

It all started when someone with the sniffles got on the computer and googled 'flu'.

The folks over at Google then compared billions of flu-related search queries (also known as 'search trends') to actual flu time series and used the best matches to create Google Flu Trends. By aggregating Google search data, Google Flu Trends can find certain terms that are fairly good indicators of flu activity, and then track such activity through time and space.
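To make the "best matches" idea concrete, here is a minimal sketch in Python. It assumes Pearson correlation as the similarity measure and uses made-up weekly numbers; the function names (`pearson`, `rank_queries`) and the data are hypothetical, and this is not a description of Google's actual pipeline.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric series."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        return 0.0  # a flat series carries no usable signal
    return cov / (spread_x * spread_y)


def rank_queries(query_series, flu_series, top_n=2):
    """Return the top_n (term, correlation) pairs, best match first."""
    scores = {term: pearson(counts, flu_series)
              for term, counts in query_series.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:top_n]


# Made-up weekly numbers, for illustration only.
flu_cases = [10, 20, 40, 80, 40, 20]
weekly_queries = {
    "flu symptoms": [100, 210, 390, 820, 410, 190],
    "fever remedy": [50, 60, 90, 150, 100, 70],
    "football scores": [300, 280, 310, 290, 305, 295],
}

best_matches = rank_queries(weekly_queries, flu_cases)
print(best_matches)
```

With these toy numbers the flu-related terms rise and fall with the case counts and rank first, while the unrelated term drops out. A real system would also need to guard against terms that correlate with flu season only by coincidence.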

The project was the first of its kind. Not only did the approach garner a publication in Nature, but it was expanded to predict outbreaks of other diseases, and was later applied to economic questions such as unemployment forecasting. Popular Google Apps such as Trends, Insights and Correlate quickly followed.

Web Mining (or Web Scraping as it is sometimes called) has come a long way since Google Flu Trends. New technologies, data strategies, and communication flows present an exciting opportunity for scholars in the Humanities and Social Sciences, both quantitative and qualitative in orientation, who wish to harness the power of the web.

Krzysztof Pelc, Professor of Political Science at McGill, is one such scholar using the web to power his research. He and his coauthors* are using Facebook data to gauge the effects of economic hard times on attitudes towards immigration. In an email message, Pelc explains what attracted him to Facebook for this project: “Facebook offers the advantage of systematic data on an individual's location, often their education level, and most importantly, their favourite 1980s records. In other words, we get a whole layer of data that is missing in Twitter on individuals' characteristics. When it comes to attitudes on immigration, that stuff is key.”

So how does web mining actually work?

At the root of these approaches is the API, which I blogged about a few weeks ago in this forum. In brief, APIs allow developers to access large data streams such as those controlled by Amazon or Facebook, and to reconstruct them for various purposes that do not necessarily follow the intentions of the original data source. These data reconstructions are called 'mashups', and often combine data to create an aggregation, visualization, or novel application from two or more data sources.
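A mashup, at its simplest, is a join between two data sources on a shared key. The sketch below assumes two hypothetical APIs that return JSON; the payloads, field names, and numbers are all made up, and the JSON strings stand in for what a real HTTP request would return.

```python
import json

# Stand-ins for the JSON bodies two hypothetical APIs might return.
# All regions and figures here are made up for illustration.
POPULATION_JSON = """[
    {"region": "Region A", "population": 1700000},
    {"region": "Region B", "population": 2700000}
]"""
POSTS_JSON = """[
    {"region": "Region A", "posts": 340},
    {"region": "Region B", "posts": 810},
    {"region": "Region C", "posts": 95}
]"""


def mashup(population_json, posts_json):
    """Combine both sources into posts per 100,000 residents, by region."""
    population = {row["region"]: row["population"]
                  for row in json.loads(population_json)}
    combined = []
    for row in json.loads(posts_json):
        region = row["region"]
        if region not in population:
            continue  # skip regions that only one source knows about
        rate = row["posts"] / population[region] * 100_000
        combined.append({"region": region, "posts_per_100k": round(rate, 1)})
    return combined


result = mashup(POPULATION_JSON, POSTS_JSON)
print(result)
```

Neither source on its own says anything about posting rates; the combination does. That new quantity, derived from data published for other purposes, is the whole point of a mashup.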

In order to see the real potential of mashups, it is useful to observe a few examples.

The most common type of mashup is innovative mapping, made possible by the Google Maps API. For example, in the Access Denied Map, users can see censorship efforts targeting online social networking communities around the world. Each marker highlights a specific country barring access to major websites, as well as details that include text, images, or video describing the censorship and efforts to combat it.

Map mashups can also be collaborative, as illustrated by Ushahidi Kenya, which allows users to post acts of violence following the 2007 Election in Kenya. Data from users are then aggregated in order to present where the most violence is taking place.
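The aggregation step behind a collaborative map can be sketched as counting reports per grid cell. This is a minimal illustration, not how Ushahidi itself is implemented; the `hotspots` function, the half-degree cell size, and the coordinates are assumptions made up for the example.

```python
import math
from collections import Counter


def hotspots(reports, cell_size=0.5, top_n=3):
    """Bucket (lat, lon) reports into grid cells and return the busiest cells.

    Each cell is identified by integer grid indices, so nearby reports
    fall into the same bucket.
    """
    cells = Counter()
    for lat, lon in reports:
        cell = (math.floor(lat / cell_size), math.floor(lon / cell_size))
        cells[cell] += 1
    return cells.most_common(top_n)


# Made-up coordinates submitted by hypothetical users.
user_reports = [
    (-1.29, 36.82), (-1.31, 36.80), (-1.27, 36.85),  # three reports close together
    (0.52, 35.27), (0.51, 35.30),                    # two in another area
    (-0.09, 34.77),                                  # one isolated report
]

busiest = hotspots(user_reports)
print(busiest)
```

A map front end would then draw a larger or darker marker for cells with higher counts.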

Mashups are particularly useful for combining multiple data sets and layering them, as illustrated by the Deprivation Mapped application. Once again, Google is spearheading the technologies to combine data sets with Fusion Tables.

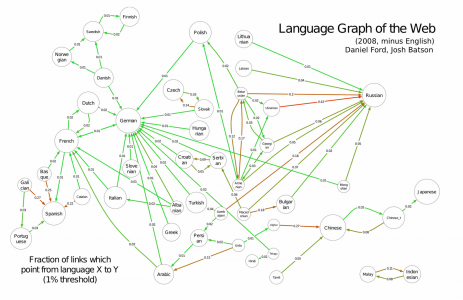

Besides mapping, mashups are also helpful to demonstrate relationships and network effects. The Unfluence mashup utilizes the “FollowTheMoney” API to track campaign donations, while the Follow the Oil mashup tracks the flow of oil money in U.S. politics.

Before I lose all of my Humanities readers, I should note that mashups are not just useful for Political Scientists. In fact, one of the most talked-about applications of web mining involves research in philosophy, art, language, and culture.

The pinnacle example is Google Books Ngram Viewer, a visualization tool that lets you graph and compare phrases from Google Books datasets over time, showing how their usage has waxed and waned over the centuries. As more of the world's literature gets online, this tool will almost certainly be of interest to scholars wishing to complement their qualitative work with quantitative content analysis.
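The core idea of charting a phrase over time can be sketched in a few lines: for each year, count how often the phrase appears and divide by the total number of same-length word sequences published that year. This is a simplified illustration with a made-up three-sentence corpus, not Google's implementation; the function names are hypothetical, and punctuation handling is ignored.

```python
from collections import defaultdict


def ngrams(words, n):
    """All consecutive n-word sequences in a list of words."""
    return [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]


def phrase_share_by_year(corpus, phrase):
    """Map each year to the share of its n-grams that match the phrase.

    corpus is an iterable of (year, text) pairs.
    """
    target = tuple(phrase.lower().split())
    n = len(target)
    hits = defaultdict(int)
    totals = defaultdict(int)
    for year, text in corpus:
        grams = ngrams(text.lower().split(), n)
        hits[year] += grams.count(target)
        totals[year] += len(grams)
    return {year: hits[year] / totals[year]
            for year in sorted(totals) if totals[year]}


# A made-up toy corpus of (publication year, text).
toy_corpus = [
    (1850, "the steam engine changed the town"),
    (1850, "a steam engine is loud"),
    (1950, "the jet engine is fast"),
]

shares = phrase_share_by_year(toy_corpus, "steam engine")
print(shares)
```

Dividing by the yearly total matters because far more text survives from recent years; raw counts alone would make almost every phrase look like it is on the rise.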

-

Although mashups and APIs provide extraordinary tools for web mining, it is a separate question entirely whether scholars will exploit these tools for their own research. Unfortunately, a number of hurdles exist between code and conference.

The first is data availability. While most major social networking sites now have APIs, some are simply more useful than others. Facebook's API, for instance, only allows developers to access 'public' profiles, meaning profiles with no privacy settings attached. Obviously this is for security reasons, but it still constitutes a major roadblock to scholars who wish to harness the enormous potential Facebook provides vis-a-vis web mining. (Then again, it is amazing what Facebook users will post publicly.)

Other sites have no APIs whatsoever. OKCupid, for instance, made a name for itself in the online dating world through its popular OKTrends blog, which web mines its own data to provide amusing statistical findings on the world of dating and relationships. But no API exists that would allow third parties such as scholars to access the raw data and produce potentially better findings.

The second major problem is one of usability and digital divides, which to my knowledge has been grossly under-discussed in academic and technological communities alike. The reality of the situation is that professional web developers, not scholars, are the ones building these platforms, writing the APIs, and creating the mashups. Most Humanities scholars who wish to create their own web mining application simply do not have the skills to do so, and are not adequately pressuring web developers to keep their interests in mind when writing the code. As a result, most APIs and mashups are oriented towards the needs of advertisers, firms, and spammers, not scholars.

There is hope, however, that the future of web mining will be more amenable to scholars, including those in the Humanities and Social Sciences. Not only are the tools becoming easier to use, but the available data is growing exponentially. In fact, the President's Council now recommends open data for all Federal Agencies, as well as data that is in machine-readable format. Scholars, start your mashups.

*Yonatan Lupu, University of California - San Diego; Robert Bond, University of California - San Diego; Christopher Fariss, University of California - San Diego; Jason Jones, University of California - San Diego; Jaime Settle, University of California - San Diego; James H. Fowler, University of California - San Diego; Cameron Marlow, Facebook